When Automation Becomes Enforcement

What we talk about when we talk about interoperable end-to-end encryption

I was wrong about Snapchat, but I was also kinda right.

When I first encountered the idea of disappearing messages, I was both skeptical and alarmed.

Skeptical because disappearing messages have an obvious defect as a security measure: If I send you a message (or a photo) that I don’t want you to have, I lose. You can remember the contents of the message, or take a screenshot, or use a separate device to photograph your screen. If I don’t trust you with some information, I shouldn’t send you that information.

I was wrong.

Not wrong about sending information to untrusted parties, though. I was wrong about the threat model of disappearing messages. I thought that the point of disappearing messages was to eat your cake and have it too, by allowing you to send a message to your adversary and then somehow deprive them of its contents. This is obviously a stupid idea.

But the threat that Snapchat (and its disappearing-message successors) was really addressing wasn’t communication between untrusted parties; it was automating data-retention agreements between trusted parties.

Automation, not enforcement

Say you and I are exchanging some sensitive information. Maybe we’re fomenting revolution, or agreeing to a legal defense strategy, or planning a union. Maybe we’re just venting about a mutual friend who’s acting like a dick.

Whatever the subject matter, we both agree that we don’t want a record of this information hanging around forever. After all, any data you collect might leak, and any data you retain forever will eventually leak.

So we agree: After one hour, or 24 hours, or one week, or one year, we’re going to delete our conversation.

The problem is that humans are fallible. You and I might have every intention in the world to stick to this agreement, but we get distracted (by the revolution, or a lawsuit, or a union fight, or the drama our mutual friend is causing). Disappearing message apps take something humans are bad at (remembering to do a specific task at a specific time) and hand that job to a computer, which is really good at that.
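To make that hand-off concrete, here is a minimal sketch of what "automation" means in this sense. It assumes a hypothetical local message store in which every message carries the retention period we agreed on; the names (Message, Conversation, purge_expired) are invented for illustration and are not taken from Snapchat, Wickr, or any real app.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    body: str
    received_at: float   # timestamp, in seconds
    delete_after: float  # agreed retention period, in seconds


@dataclass
class Conversation:
    messages: list = field(default_factory=list)

    def purge_expired(self, now: float) -> int:
        """Delete every message whose agreed retention period has elapsed.

        Returns the number of messages removed.
        """
        before = len(self.messages)
        self.messages = [
            m for m in self.messages
            if now - m.received_at < m.delete_after
        ]
        return before - len(self.messages)


HOUR = 3600.0

chat = Conversation()
chat.messages.append(Message("meet at noon", received_at=0.0, delete_after=HOUR))
chat.messages.append(Message("bring the banner", received_at=0.0, delete_after=24 * HOUR))

# Two hours later, the computer does the remembering we would have forgotten.
removed = chat.purge_expired(now=2 * HOUR)
```

Nothing here stops either of us from copying a message before it expires. The code only carries out a deletion we both already agreed to.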

Once I understood this, I became a big advocate for disappearing messages. I came to this understanding through a conversation with Nico Sell, founder of the disappearing message company Wickr, and it made so much sense I volunteered on her advisory board. (Disclosure: Wickr was eventually sold to Amazon, and I received a small and unexpected sum of money when this happened; I no longer have any financial interest in the company or any of its competitors.)

Enforcement, not automation

But as much as I was wrong about disappearing messages, I was also right.

From the start, I understood that there were lots of people who saw disappearing message directives as a tool for enforcing agreements, not just automating them.

That’s a distinction with a difference. If you and I agree to disappearing messages as a means of automating our agreement, then we have to truly, freely agree, without any coercion. If you’re my boss and you send me an instruction to commit a crime, you can set the “delete after one hour” bit, but I can ignore it and retain the message and turn it over to the FBI.

By contrast, enforcing the disappearing message bit is, by definition, coercive, and in a way that goes well beyond the mere act of deleting your message.

If a disappearing message bit is to be an enforcement tool, then there must be a facility built into your computer that causes it to ignore your instructions when a remote party countermands them.

A computer that allows third parties to override its owner’s instructions is a Bad Idea. It’s a system that — by design — runs programs the owner can’t inspect, modify, or terminate.

It’s a nightmarish scenario that we’ve been fighting over since the early days of DRM, and it’s a fracture-line that’s only widened over the years. Today, everyone wants to give your computer orders that you can’t override: stalkerware companies, cyber-arms dealers, bossware companies, remote proctoring companies, printer companies, and many others.

They all have different rationales and ideologies, but all of these actors share a common belief: that your computer should be designed to override your commands when a third party countermands them.

This is not normal

The obvious danger from any of these applications is that a computer that is designed to ignore your instructions when the right third party gives it orders might be fooled by the wrong third party. It’s the same risk that comes from putting police overrides in security systems: not just that a dirty cop might abuse this facility, but that a criminal might impersonate a cop and abuse it.

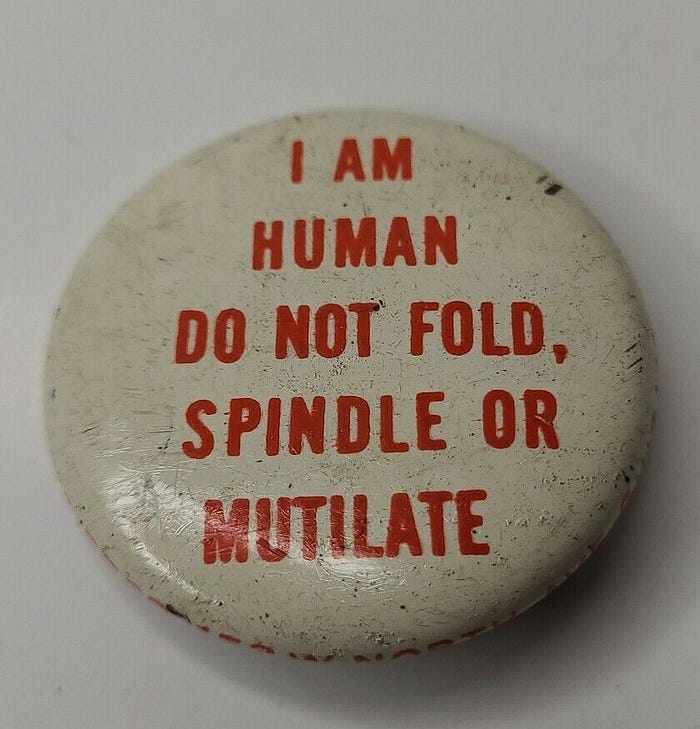

But beyond this danger is a subtler — and more profound — one. We should not normalize the idea that our computers are there to control us, rather than to empower us.

This is an old, old fight.

The struggle over automation has always been about control, going all the way back to the Luddites. The question of whether the computer serves you or another — the government, a corporation, a ransomware gang or a botmaster — is the central question of digital rights.

It’s a question with enormous relevance to interoperability. In the mid-2000s, Microsoft rolled out a pair of technologies that had profound implications for competition, users’ self-determination, and digital rights: trusted computing (a way for third parties to determine which software your computer was running, even if you objected) and “Information Rights Management” (DRM for Office files, sold as a way to prevent the people you send Word or Excel files to from capturing the information in them).

Bill Gates touted these new features as a means of securing all things, including medical records. His go-to hypothetical was: This will prevent your doctor’s office from leaking the fact that you are HIV-positive.

Many of us pointed out that this was nonsense. First, the fact that you are HIV-positive is a single bit of information, which means that a malicious insider at your doctor’s office need only remember one fact in order to compromise you, IRM or no.

More importantly, we pointed out that this would allow Microsoft to block the interoperable Office suites that were helping users escape from Windows’ walled garden — programs like iWork (Apple) and the free OpenOffice suite.

Even back then, there was (perhaps deliberate) confusion about whether IRM was a tool for automation or enforcement. At the time, I had an email exchange with the head of Microsoft’s identity management program (an old friend whom I had once worked for, at his previous company) in which he tried to persuade me that I was wrong to impute sinister, anticompetitive motives to Microsoft.

My correspondent insisted that IRM was an automation tool — a way for the sender of an Office document to reliably remind the recipient of their expectations for it. Yes, my friend said, you could screenshot the data, or copy it down by hand, or break it with a third-party program, but that wasn’t the point. The point was to “keep honest users honest.” (“Keep honest users honest” is the rallying cry of all DRM advocates; as Ed Felten quipped, that’s like “keeping tall users tall.”)

So I called his bluff. I said, “Right now, if you try to print an IRM-locked document I sent you, it displays a message saying ‘Cory Doctorow has prohibited printing of this document. [CANCEL]’ If this is about automation and not enforcement, why not just add an [OVERRIDE] button, right next to that [CANCEL] button?”

My friend hemmed and hawed and misdirected, but eventually he admitted what we both knew: No enterprise customer would buy IRM if it came with an override button. Everyone who bought IRM considered it an enforcement mechanism, not an automation tool.

Right-clicker mentality

People who want to control you with your computer put a lot of energy into blurring the line between enforcement and automation.

Take NFT advocates. A common refrain in that market is that NFTs obviate the need for DRM, since they establish “ownership” via permanent, unalterable blockchain receipts. With NFTs (they say), we can get past the ugly business of third parties controlling your computer to protect their rights.

But this rhetoric pops like a soap bubble the instant people start saving boring apes to their hard-drives. Then, NFTland erupts in a condemnation of “right-clicker mentality.”

This isn’t limited to Web 1.0 (Microsoft) and Web3 (NFTs); Google (Web 2.0) got into this in a big way in 2018, when it rolled out Information Rights Management for email, billing it as “Confidential mode.”

And, as with every other type of IRM, Google deliberately blurred the line between automation and enforcement, even parroting Bill Gates’s rhetoric that this would somehow prevent information that you sent to untrusted third parties from leaking.

Enter the DMA, pursued by a bear

Much of the time, the blurred automation/enforcement distinction doesn’t matter. If you and I trust one another, and you send me a disappearing message in the mistaken belief that the thing preventing me from leaking it is the disappearing message bit and not my trustworthiness, that’s okay. The data still doesn’t leak, so we’re good.

But eventually, the distinction turns into a fracture line.

That’s happening right now.

The EU’s Digital Markets Act (DMA) is on the march, and poised to become law. When it passes, it will impose an interoperability requirement on the largest tech firms’ messaging platforms, forcing them to allow smaller players to choose to connect with them. This will have a powerful impact on tech monopolies, which rely on high switching costs to keep their users locked in: It will let you leave a platform but stay connected to the friends, communities, and customers who stay behind.

The rule won’t take effect for several years, though, so that technical experts can work out a way to safeguard end-to-end encryption between big platforms and new interoperators.

Some security experts have raised concerns that this will weaken encryption, and this is a very important point to raise. End-to-end encryption is key to securing our digital human rights. Governments have been waging war on it since the 1990s, and they’re still at it.

But while the security community agrees that we mustn’t let interoperability compromise user security, if you read carefully enough, you’ll see that there is a profound disagreement about what we mean when we say “user security.”

My computer, my rules

For example, many DMA skeptics point out that interoperability is unlikely to preserve disappearing messages.

But that’s only true if you think the point of disappearing messages is enforcement, not automation.

Remember, you can’t be “secure” in some abstract way. You can only be secure in a specific context. A fire sprinkler makes you secure from fires, not burglars. A burglar alarm makes you more secure, but it makes a burglar less secure.

If your boss relies on a disappearing message facility to delete the evidence of his harassing DMs, then enforcing disappearing messages increases his security (to harass you with impunity) but it decreases your security (to report him to a workplace regulator or the police).

One thing that I hope the DMA will enable is interoperable messaging clients that allow you to override the disappearing message bits: “Cory Doctorow prefers that this message be deleted in one hour [OK] [OVERRIDE].”
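A rough sketch of how such a client might treat the bit, as a preference rather than a command. Everything here is hypothetical: the IncomingMessage type, the function names, and the rule that an override means "keep indefinitely" are assumptions made for illustration, not features of any existing messenger or of the DMA itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class IncomingMessage:
    sender: str
    body: str
    delete_after_hours: Optional[int]  # sender's preference; None means no request


def prompt_text(msg: IncomingMessage) -> str:
    """Text shown to the recipient when a message carries a deletion preference."""
    if msg.delete_after_hours is None:
        return ""
    return (
        f"{msg.sender} prefers that this message be deleted in "
        f"{msg.delete_after_hours} hour(s) [OK] [OVERRIDE]"
    )


def retention_hours(msg: IncomingMessage, choice: str) -> Optional[int]:
    """Return how long the recipient's client keeps the message.

    "OK" honors the sender's preference. "OVERRIDE" keeps the message
    indefinitely (None), because the recipient's computer takes the
    recipient's orders.
    """
    if choice == "OVERRIDE":
        return None
    return msg.delete_after_hours


msg = IncomingMessage("Cory Doctorow", "see you at the picket line", 1)
banner = prompt_text(msg)
```

The sender's bit still does its job when both parties agree, since choosing [OK] deletes on schedule. The one thing the bit cannot do is countermand the person who owns the device.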

In other words, for years, the absence of an interoperability framework let us conflate the automation of disappearing message agreements with their enforcement. If the DMA forces us to recognize that all we can and should hope for is automation, that is a feature, not a bug.

Cory Doctorow (craphound.com) is a science fiction author, activist, and blogger. He has a podcast, a newsletter, a Twitter feed, a Mastodon feed, and a Tumblr feed. He was born in Canada, became a British citizen and now lives in Burbank, California. His latest nonfiction book is How to Destroy Surveillance Capitalism. His latest novel for adults is Attack Surface. His latest short story collection is Radicalized. His latest picture book is Poesy the Monster Slayer. His latest YA novel is Pirate Cinema. His latest graphic novel is In Real Life. His forthcoming books include Chokepoint Capitalism: How to Beat Big Tech, Tame Big Content, and Get Artists Paid (with Rebecca Giblin), a book about artistic labor market and excessive buyer power; Red Team Blues, a noir thriller about cryptocurrency, corruption and money-laundering (Tor, 2023); and The Lost Cause, a utopian post-GND novel about truth and reconciliation with white nationalist militias (Tor, 2023).